Overview

Continuum is a SaaS platform that enables secure AI model processing on confidential data using end-to-end encryption and confidential computing environments. For you as an end user, this means that your prompts to the AI, like "tell a joke," remain private. The same applies to the AI's responses. Private in this context means "inaccessible to anyone but yourself."

Continuum is available through the ai.confidential.cloud domain. This domain not only serves the Continuum API but also provides a secure, ChatGPT-like web interface for quickly trying out and interacting with Continuum. This overview explains the platform's key functionalities, with an emphasis on its security features and its integration with existing AI workflows.

Basics

Continuum relies on two core mechanisms: confidential computing and advanced sandboxing.

If confidential computing is new to you, you can learn about it in our Confidential Computing Wiki. In a nutshell, confidential computing is a hardware-based technology that keeps data encrypted in memory even during processing and makes it possible to verify the integrity of workloads.

Continuum uses confidential computing to shield the AI worker that processes your prompts. Essentially, the AI worker is a virtual machine (VM) that has access to an AI accelerator like the NVIDIA H100 and runs some AI code. The AI code loads an AI model onto the accelerator, pre-processes prompts and feeds them to the AI model. In the case of ai.confidential.cloud, the AI model is an open-source large language model (LLM). These basic concepts are all standard and independent of Continuum and confidential computing.

Continuum enables confidential computing for both the VM and the AI accelerator of the AI worker. With that, the AI worker is protected from the rest of the infrastructure. "The infrastructure" includes the hardware and software stack the AI worker runs on and also the people managing that stack. In the case of ai.confidential.cloud, "the infrastructure" is essentially the Azure cloud. Here, confidential computing ensures that the Azure cloud can't access the AI worker and your data.

Continuum ensures that your prompts and the corresponding responses are encrypted when they travel to and from the AI worker. In combination with the aforementioned confidential-computing mechanisms, this ensures that the infrastructure can only ever see encrypted data.

Protecting against more than the infrastructure

Even with the aforementioned security mechanisms in place, one concern remains: In practice, the AI code will likely come from a third party. How does one know that the AI code doesn't accidentally or maliciously leak prompts, e.g., by writing them to the disk or the network in plaintext?

In the case of ai.confidential.cloud, the AI code is put together by Edgeless Systems from open-source software like vLLM and includes an open-source AI model like Mistral 7B.

One solution to this problem would be to thoroughly review the AI code. However, this would usually be impractical as the AI code is likely to be complex and receive frequent updates.

Continuum addresses the problem by running the AI code inside a sandbox on the confidential computing-protected AI worker. In general terms, a sandbox is an environment that prevents an application from interacting with the rest of a system. In the case of Continuum, the AI code runs inside an adapted version of Google's gVisor sandbox. This ensures that the AI code has no means to leak prompts and responses in plaintext. The only thing the AI code is able to do is receive encrypted prompts, query the accelerator, and return encrypted responses.

With this architecture in place, your prompts are even protected from the entity that provides the AI code. In simplified terms, in the case of the well-known ChatGPT, this would mean that you would have to trust neither OpenAI (the company that provides the AI code) nor Microsoft Azure (the company that runs the infrastructure).

You can learn more about the protection that Continuum provides against different entities and actors in the security goals section.

Verifying the security mechanisms

The previous sections outlined how Continuum ensures that AI services like ai.confidential.cloud are protected against the infrastructure and the provider of the AI code.

A natural question to ask now is: How does an end user know whether all of this is actually in place? The answer is: remote attestation. Remote attestation is one of the pillars of confidential computing. With remote attestation, it's possible to verify that a given piece of software is indeed running protected by confidential computing. Further, it's possible to verify precisely, based on cryptographic hashes, which software is running.
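As a rough illustration of the hash-based part of this check, the following sketch compares a reported software measurement against an expected one. It's a simplification under stated assumptions: real remote attestation relies on hardware-signed reports, which are omitted here, and the names `measure` and `matches_expected` as well as the example data are hypothetical and not part of Continuum.

```python
import hashlib
import hmac


def measure(software_image: bytes) -> str:
    """Return the SHA-256 digest of a software image as a hex string."""
    return hashlib.sha256(software_image).hexdigest()


def matches_expected(reported: str, expected: str) -> bool:
    """Compare two hex digests in constant time."""
    return hmac.compare_digest(reported, expected)


# Hypothetical example data: the verifier knows the expected measurement upfront.
expected = measure(b"ai-worker-image-v1")

print(matches_expected(measure(b"ai-worker-image-v1"), expected))  # True
print(matches_expected(measure(b"tampered-image"), expected))      # False
```

Any change to the measured software, however small, yields a different digest, which is why a hash match identifies the running software precisely.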

In Continuum, client software uses remote attestation to verify that the AI worker is running precisely the expected software, including the sandbox. The client software doesn't directly verify the remote-attestation statements from AI workers. Instead, workers are verified by an attestation service. The attestation service is also protected with confidential computing. The purpose of the attestation service is to simplify attestation if there's more than one AI worker. In such a case, the client software only has to verify the attestation service once and can then leave the verification of AI workers to the attestation service.

To take complexity off the end user and to allow usage in browser environments, the user talks to a web service that verifies the attestation service on their behalf. Once the attestation service is verified, the web service exchanges the prompt encryption keys with it. The attestation service forwards the keys to the AI workers. The keys are then used to encrypt the communication between the client software and AI workers.
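The key flow described above can be sketched as follows. This is a toy model, not Continuum's implementation: the classes `Worker` and `AttestationService`, the function `exchange_key`, and the boolean `verified` flags are hypothetical stand-ins for the actual attestation results. The sketch also assumes that the web service generates the key and that only verified workers receive it.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class Worker:
    name: str
    verified: bool  # assumed outcome of the attestation service's check
    keys: list = field(default_factory=list)


@dataclass
class AttestationService:
    workers: list

    def forward_key(self, key: bytes) -> list:
        """Hand the key only to workers that passed verification."""
        recipients = [w for w in self.workers if w.verified]
        for worker in recipients:
            worker.keys.append(key)
        return [w.name for w in recipients]


def exchange_key(service: AttestationService, service_verified: bool) -> list:
    """Web-service side: release a fresh key only to a verified attestation service."""
    if not service_verified:
        raise PermissionError("attestation service failed verification")
    prompt_key = secrets.token_bytes(32)  # 256-bit symmetric key
    return service.forward_key(prompt_key)


service = AttestationService([Worker("worker-a", True), Worker("worker-b", False)])
print(exchange_key(service, service_verified=True))  # ['worker-a']
```

The point of the sketch is the ordering: no key leaves the web service before the attestation service is verified, and no key reaches a worker that the attestation service hasn't verified.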

Verification policies

So far, it has been established that the client software verifies the attestation service, which in turn verifies the AI workers. This verification is based on configurable policies. In essence, a verification policy includes the expected software hashes and other parameters. This is standard procedure for confidential computing.

The client software's verification policy for the attestation service contains the expected hash of the attestation service's software and parameters, including the policy for the verification of AI workers. The client software will only accept an attestation service for which the hash matches. By extension, the client software then knows that the attestation service will only accept certain AI workers.
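To illustrate why accepting the attestation service also pins the accepted AI workers, here is a minimal sketch in which the worker policy is nested inside the attestation-service policy and both are covered by one hash. The policy fields, their values, and the use of canonical JSON for hashing are assumptions made for this example and don't reflect Continuum's actual policy format.

```python
import hashlib
import json


def policy_hash(policy: dict) -> str:
    """Hash a policy deterministically via canonical (sorted-key) JSON."""
    canonical = json.dumps(policy, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical policies; field names and values are illustrative only.
worker_policy = {"software_hash": "aaaa", "sandbox": "gvisor"}
service_policy = {"software_hash": "bbbb", "worker_policy": worker_policy}

# The client pins a single hash that covers the nested worker policy too.
pinned = policy_hash(service_policy)

# An attestation service that would accept a different worker is rejected.
altered = {
    "software_hash": "bbbb",
    "worker_policy": {"software_hash": "cccc", "sandbox": "gvisor"},
}

print(policy_hash(service_policy) == pinned)  # True
print(policy_hash(altered) == pinned)         # False
```

Because the worker policy is part of the hashed input, changing which workers the attestation service accepts changes the hash the client checks.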

Security evidence in ai.confidential.cloud

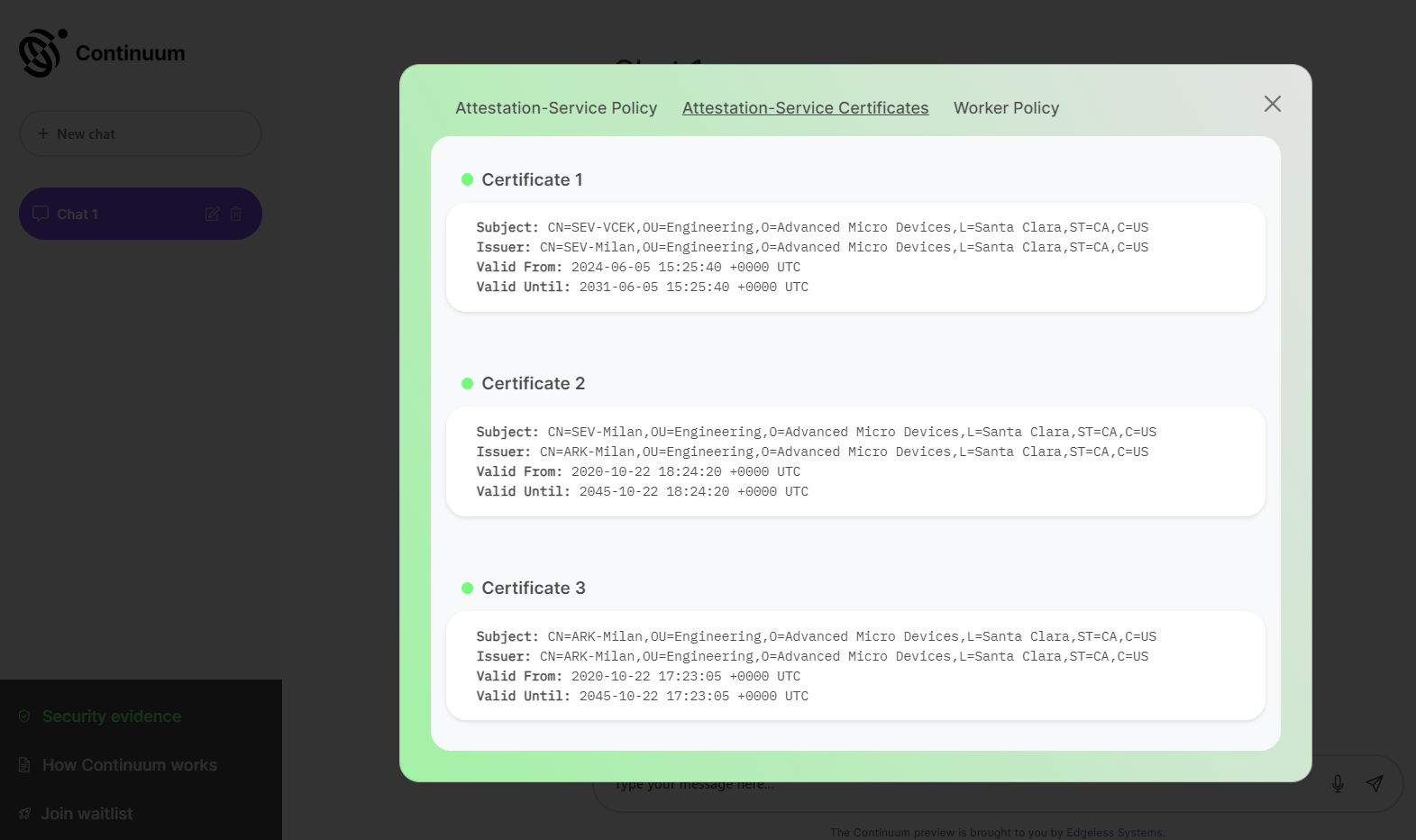

On ai.confidential.cloud, you can view some of the verification policies and corresponding evidence by selecting Security evidence. The tab Attestation-Service Policy shows parts of the policy used by the client software to verify the attestation service. The tab Attestation-Service Certificates summarizes the cryptographic certificates presented by the attestation service as part of the process. The tab Worker Policy shows parts of the policy used by the attestation service to verify the AI workers.

Future versions of ai.confidential.cloud will display more information on verification policies, including the actual software hashes that correspond to openly published software components.

Client software

The previous sections relied heavily on the term client software. In the context of Continuum, client software refers to the software that interacts with the attestation service and AI workers via Continuum's API. In production environments, this software is responsible for verifying attestation and for encrypting communication with the API.

For demonstration purposes, the web interface at ai.confidential.cloud provides a ChatGPT-like experience. In this case, the client software is the web server managed by Edgeless Systems, which interacts with your browser. However, in production, you would typically develop your own client software, leveraging Continuum's API directly to create a secure, tailored solution without relying on an intermediary web server.

The Continuum client SDK and the code of the ai.confidential.cloud web server will be released as open source in the near future.

Summary

This page explains the core concepts of Continuum from the perspective of the end user. In particular, it explains the purpose of the involved software components and their interactions. Crucially, it outlines the central remote-attestation mechanisms and the idea behind the sandboxing of the AI code. The main takeaway is that Continuum's design makes it possible to protect user prompts not only against the infrastructure, but also against the provider of the AI code and the service provider.

What this page doesn't cover are concepts and mechanisms that don't directly affect the end user. To get the full picture, head over to the architecture section.