Overview

Continuum is a ChatGPT-like GenAI API that enables secure processing of confidential prompts and replies using end-to-end encryption and confidential computing environments. For you as an API user, this means that your prompts to the AI—like "tell a joke"—remain private. The same applies to the AI's responses. Private in this context means inaccessible to anyone but you.

This overview is meant to give you a deeper technical understanding of how Continuum achieves such strong security properties.

Forming a trusted and end-to-end encrypted API

To guarantee the confidentiality of your prompts and replies, Continuum ensures that your data remains encrypted with key material inaccessible to any other party across the entire supply chain. This means that from the moment you make an API call at the client, through prompt processing by the AI model, and back to the client, your data stays protected. Additionally, Continuum ensures that none of the involved services can send your data elsewhere.

Continuum achieves this by:

1. Ensuring that all services and processes along your API call are trusted and work as intended. If you can't trust every component along the way, you can't know that a confidential channel was actually established.

2. Encrypting your data end to end along the whole supply chain, so that no one but you can decrypt it.

3. Enforcing that third-party software runs in an isolated environment with no means to reach the outside world, so your data can't leave the intended supply chain.

Continuum achieves 1 and 2 through confidential computing and 3 through sandboxing.

How Continuum uses confidential computing

If confidential computing is new to you, check out our Confidential Computing Wiki to learn more. In a nutshell, confidential computing is a technology that keeps data encrypted in memory—even during processing—and allows you to verify the integrity of workloads. This is ultimately enforced through special hardware extensions in modern processors.

Continuum uses confidential computing to shield the AI worker that processes your prompts. Essentially, the AI worker is a virtual machine (VM) that has access to an AI accelerator like the NVIDIA H100 and runs some AI code. The AI code loads an AI model onto the accelerator, pre-processes prompts and feeds them to the AI model. In the case of Continuum, the AI model is an open-source large language model (LLM).

Continuum leverages confidential computing for both the virtual machine (VM) and the AI accelerator of the AI worker. This means that the model and code are protected from the rest of the infrastructure.

"The infrastructure" includes the entire hardware and software stack that the AI worker runs on, as well as the people managing that stack. For example, in the case of Continuum, "the infrastructure" is essentially the Azure cloud. With confidential computing, the Azure cloud can't access the AI worker or your data, ensuring your data remains secure and private.

Continuum ensures that your prompts and the corresponding responses are encrypted when they travel to and from the AI worker. In combination with the aforementioned confidential-computing mechanisms, this ensures that the infrastructure only ever sees encrypted data.

Protecting against more than the infrastructure

Even with the aforementioned security mechanisms in place, one concern remains: In practice, the AI code—meaning the code that loads the model and pre-processes prompts—will likely come from a third party. How do you know that the AI code doesn't accidentally or maliciously leak prompts, e.g., by writing them to the disk or the network in plaintext?

In the case of Continuum, the AI code is put together by Edgeless Systems from open-source software like vLLM and serves open-source AI models.

One solution to this problem would be to thoroughly review the AI code. However, this would usually be impractical as the AI code is likely to be complex and receive frequent updates.

Continuum addresses the problem by running the AI code inside a sandbox on the AI worker protected by confidential computing. In general terms, a sandbox is an environment that prevents an application from interacting with the rest of a system. In the case of Continuum, the AI code runs inside an adapted version of Google's gVisor sandbox. This ensures that the AI code has no means to leak prompts and responses in plaintext. The only thing the AI code is able to do is receive encrypted prompts, query the accelerator, and return encrypted responses.

By combining confidential computing and sandboxing, Continuum ensures that your prompts are protected even from the entity that provides the AI code. In simplified terms, applied to the well-known ChatGPT, this would mean that you would have to trust neither OpenAI (the company that provides the AI code) nor Microsoft Azure (the company that runs the infrastructure).

You can learn more about the protection that Continuum provides against different entities and actors in the security properties section.

Verifying the security mechanisms

The previous sections outlined how Continuum is protected against the infrastructure and the provider of the AI code.

A natural question that comes up is: How can an end user trust that all of this is actually happening? The answer is remote attestation. Remote attestation is a cornerstone of confidential computing. It allows anyone to verify that the software is running in a protected environment using confidential computing. On top of that, it provides cryptographic proof of exactly which software is running, based on unique cryptographic hashes.

In Continuum, the client software (the continuum-proxy) uses remote attestation to ensure that the AI worker is running the exact software it’s supposed to, including the sandbox environment. But here’s the trick: the client software doesn’t directly check the AI worker’s attestation. Instead, that job is handled by an attestation service. The attestation service, which is also protected with confidential computing, simplifies the attestation process. The client software only needs to verify the attestation service once, and from there, the attestation service takes care of verifying the individual AI workers running in the GenAI backend.

To take complexity off the end user and to allow usage in browser environments, the user simply communicates with the continuum-proxy which handles attestation on their behalf.

End-to-end encryption

Once the attestation service is verified, the proxy exchanges the prompt encryption keys with it. The attestation service forwards the keys to the AI workers. The keys are then used to encrypt the communication between the client software and the AI workers.
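The ordering of these steps can be sketched as follows. This is a minimal illustration, not Continuum's implementation: the `AttestationService` class, the `exchange_prompt_key` function, and the boolean verification flags are hypothetical stand-ins, and the actual encryption of prompts is left out. The sketch only shows that a key is released after verification and reaches only workers that were verified.

```python
import secrets


class AttestationService:
    """Hypothetical stand-in for the attestation service."""

    def __init__(self):
        self.worker_keys = {}  # worker name -> prompt encryption key

    def forward_key(self, key, workers):
        # Forward the key only to workers whose attestation succeeded.
        for name, verified in workers.items():
            if verified:
                self.worker_keys[name] = key


def exchange_prompt_key(service_verified, service, workers):
    """Generate a prompt key and hand it over only to a verified service."""
    if not service_verified:
        raise PermissionError("attestation service not verified; key withheld")
    key = secrets.token_bytes(32)  # fresh 256-bit symmetric key
    service.forward_key(key, workers)
    return key


service = AttestationService()
key = exchange_prompt_key(True, service, {"worker-a": True, "worker-b": False})
print(sorted(service.worker_keys))  # only the verified worker holds the key
```

In this sketch, the verification result is passed in as a flag. In a real deployment it would be the outcome of remote attestation.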

Verification policies

So far, we’ve established that the client software verifies the attestation service, and the attestation service in turn verifies the AI workers. This whole process runs on configurable policies. Essentially, a verification policy outlines the expected software hashes and other parameters a service should match to be deemed trustworthy. A service can then check another service's integrity by simply obtaining a fingerprint of its current state and configuration. Because the checked service runs in a confidential-computing environment, this fingerprint is a hardware-enforced, cryptographically binding proof, making it an unforgeable measurement of the service’s state (including code) and configuration. The verifying service compares the fingerprint against the defined policy and, depending on the result, considers the other service trustworthy or not.
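As a rough illustration of such a policy check, the sketch below compares a reported fingerprint against a policy. The `Policy` and `Fingerprint` types, the `debug` parameter, and the function name are assumptions made for this example and don't reflect Continuum's actual policy format. Verifying the hardware signature on the fingerprint is omitted.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical policy: expected software hash plus other parameters."""
    expected_measurement: str  # hex-encoded SHA-256 of the expected software
    expected_params: dict = field(default_factory=dict)


@dataclass
class Fingerprint:
    """Hypothetical fingerprint reported by the service being checked."""
    measurement: str
    params: dict


def is_trustworthy(policy: Policy, fingerprint: Fingerprint) -> bool:
    # The software hash must match exactly (compared in constant time).
    if not hmac.compare_digest(policy.expected_measurement, fingerprint.measurement):
        return False
    # Every parameter named in the policy must have the expected value.
    return all(
        fingerprint.params.get(name) == value
        for name, value in policy.expected_params.items()
    )


good_hash = hashlib.sha256(b"attestation-service v1").hexdigest()
policy = Policy(good_hash, {"debug": False})
print(is_trustworthy(policy, Fingerprint(good_hash, {"debug": False})))  # True
print(is_trustworthy(policy, Fingerprint(good_hash, {"debug": True})))   # False
```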

For the client software, the verification policy includes the expected hash of the attestation service’s software along with other parameters, in particular the policy that the attestation service is expected to use for verifying the AI workers that process the encrypted prompts. The client software will only trust an attestation service if its hash matches what’s expected. By extension, the client software then knows that the attestation service will only accept specific AI workers (as it has checked the integrity of the attestation service's policies for accepting AI workers).
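The transitive nature of this check can be sketched as follows. The dictionary layout, the field names, and the idea of pinning the worker policy through a digest of its canonical JSON form are assumptions made for illustration. The source doesn't specify how the worker policy is represented inside the client's policy.

```python
import hashlib
import json


def policy_digest(policy: dict) -> str:
    """SHA-256 over a canonical JSON encoding of a policy (illustrative)."""
    canonical = json.dumps(policy, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def client_accepts(client_policy: dict, service_report: dict) -> bool:
    """Accept the attestation service only if both its software hash and
    the worker policy it enforces match what the client expects."""
    return (
        service_report["software_hash"] == client_policy["expected_service_hash"]
        and policy_digest(service_report["worker_policy"])
        == client_policy["expected_worker_policy_digest"]
    )


worker_policy = {"expected_worker_hash": hashlib.sha256(b"ai-worker").hexdigest()}
service_hash = hashlib.sha256(b"attestation-service").hexdigest()
client_policy = {
    "expected_service_hash": service_hash,
    "expected_worker_policy_digest": policy_digest(worker_policy),
}

report = {"software_hash": service_hash, "worker_policy": worker_policy}
print(client_accepts(client_policy, report))  # True

# A service that would accept different workers is rejected.
lax_policy = {"expected_worker_hash": hashlib.sha256(b"other").hexdigest()}
tampered = {"software_hash": service_hash, "worker_policy": lax_policy}
print(client_accepts(client_policy, tampered))  # False
```

Because the worker policy is pinned in the client's policy, the client never has to attest individual workers itself in this sketch.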

Security evidence in ai.confidential.cloud

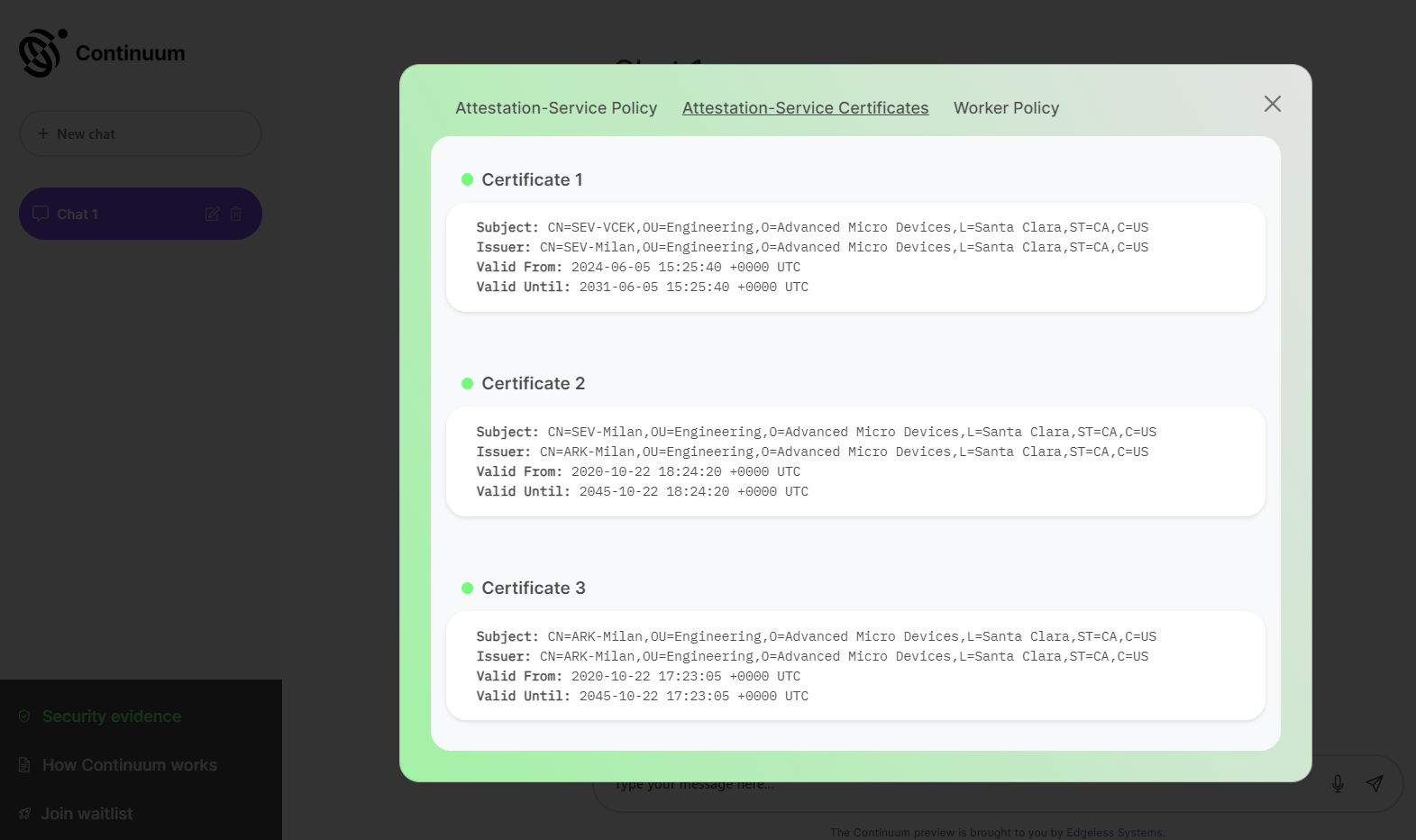

On ai.confidential.cloud, you can view the verification policies. The tab "Attestation-Service Policy" shows the policy used by the client software to verify the attestation service. The tab "Attestation-Service Certificates" summarizes the cryptographic certificates presented by the attestation service as part of the process. The tab "Worker Policy" shows parts of the policy used by the attestation service to verify the AI workers.

Client software

The previous section relied heavily on the term client software. In the context of Continuum, client software refers to the software that interacts with the attestation service and AI workers via Continuum’s API. This software is crucial in production environments for attestation verification and ensuring encrypted communication with the API.

For demonstration purposes, the web interface at ai.confidential.cloud provides a ChatGPT-like experience. In this case, the client software is the web server managed by Edgeless Systems, which interacts with your browser. In production, however, this web server would be replaced by the continuum-proxy running on the client side, so that Edgeless Systems no longer has to be trusted.